If you run a Laravel application purely as a headless API, you can benefit from disabling HTTP sessions.

We use this setup for our Oh Dear monitoring service, where the remote servers that check for uptime are all headless Laravel setups.

Laravel’s default session behaviour

A default Laravel application has HTTP sessions enabled. It uses the file driver and stores the sessions in the storage/framework/sessions directory of your application.

It’s not uncommon to find several thousand session files in there.

$ pwd

storage/framework/sessions

$ ls -alh | wc -l

1155

Every new visitor (or bot) that loads your application generates a new, unique session ID, and with it a new session file.

For our API endpoints, that actually started to add up.

$ ls -alh | wc -l

33984

Over 30,000 session files, and we weren’t even using sessions. All because they are enabled by default!
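Note that `ls -alh | wc -l` also counts the `total` line and the `.` and `..` entries, so the figures above overshoot slightly. A minimal sketch of a more precise count, assuming a `find` that supports `-maxdepth` (GNU and BSD both do). The function name `count_session_files` is made up for illustration, and the `.gitignore` exclusion assumes your checkout keeps one in that directory.

```shell
# Count only regular files in the session directory.
# Defaults to the path used in this post; pass another directory as $1.
count_session_files() {
    local dir="${1:-storage/framework/sessions}"
    # -type f skips "." and ".."; the .gitignore is not a session file.
    find "$dir" -maxdepth 1 -type f ! -name '.gitignore' | wc -l | tr -d ' '
}
```

Run it from the application root as `count_session_files`, or point it at another directory with `count_session_files /path/to/sessions`.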

What’s the performance impact with sessions?

You can store sessions in files (the default), Redis, Memcached, … The file driver has to sweep its own storage though, or older session files would never be removed.

This is configured in the config/session.php file.

/*

|--------------------------------------------------------------------------

| Session Sweeping Lottery

|--------------------------------------------------------------------------

|

| Some session drivers must manually sweep their storage location to get

| rid of old sessions from storage. Here are the chances that it will

| happen on a given request. By default, the odds are 2 out of 100.

|

*/

'lottery' => [2, 100],

This means that, on average, 2% of all requests trigger code that checks every file in the directory and deletes the expired ones. It’s garbage collection for your session files.

Doesn’t sound like much, does it, 2%?

For most applications, that’s a sensible default. Our API, however, gets a few million requests a day, and at that point things start to add up.

Of all those API calls, 2% would loop over the directory, check the age of every file, and delete it if needed. That means a significant, random slowdown on 2% of all our API calls.

If you strace such a call, you can see how intensive the file operations can get.

(strace shows system calls: the low-level calls that the PHP process makes to the kernel.)

$ strace -p [PID] -e trace=file

[...]

[pid 12084] lstat("storage/framework/sessions/L0Ecb4JK1yeOVyEZgTmIscT8uuVOfXJE3OI174Rp", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] stat("storage/framework/sessions/L0Ecb4JK1yeOVyEZgTmIscT8uuVOfXJE3OI174Rp", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] access("storage/framework/sessions/L0Ecb4JK1yeOVyEZgTmIscT8uuVOfXJE3OI174Rp", F_OK) = 0

[pid 12084] lstat("storage/framework/sessions/GGjrcIYeceatB0EK9dvpqT4thFQM39PfDQFSbW6R", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] stat("storage/framework/sessions/GGjrcIYeceatB0EK9dvpqT4thFQM39PfDQFSbW6R", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] access("storage/framework/sessions/GGjrcIYeceatB0EK9dvpqT4thFQM39PfDQFSbW6R", F_OK) = 0

[pid 12084] lstat("storage/framework/sessions/TGi8DXmKJfT7p6YFaJ14lnHGpN2ey6ydqBpqodZv", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] stat("storage/framework/sessions/TGi8DXmKJfT7p6YFaJ14lnHGpN2ey6ydqBpqodZv", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] access("storage/framework/sessions/TGi8DXmKJfT7p6YFaJ14lnHGpN2ey6ydqBpqodZv", F_OK) = 0

[pid 12084] lstat("storage/framework/sessions/pvZdw0hYzvn6BdxdTslKeBTuQIeVZj8V2cV7OswA", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] stat("storage/framework/sessions/pvZdw0hYzvn6BdxdTslKeBTuQIeVZj8V2cV7OswA", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] access("storage/framework/sessions/pvZdw0hYzvn6BdxdTslKeBTuQIeVZj8V2cV7OswA", F_OK) = 0

[pid 12084] lstat("storage/framework/sessions/eu19GV2bGUfe1yGviTkuObRYC35mUNOLjQmsDQ3z", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] stat("storage/framework/sessions/eu19GV2bGUfe1yGviTkuObRYC35mUNOLjQmsDQ3z", {st_mode=S_IFREG|0644, st_size=118, ...}) = 0

[pid 12084] access("storage/framework/sessions/eu19GV2bGUfe1yGviTkuObRYC35mUNOLjQmsDQ3z", F_OK) = 0

For every session file in the storage/framework/sessions directory, three system calls need to be performed.
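To check the calls-per-file claim on your own server, you can tally the system calls in a captured trace. This is a rough sketch: the helper name `count_syscalls` is hypothetical, and it assumes strace output in the shape shown above, with or without the `[pid N]` prefix.

```shell
# Read strace output on stdin and print "<syscall> <count>" per call type.
count_syscalls() {
    sed -E 's/^\[pid +[0-9]+\] +//' |   # drop the optional "[pid 12084] " prefix
        grep -oE '^[a-z_0-9]+\(' |      # keep only the syscall name and its "("
        tr -d '(' |
        sort | uniq -c |
        awk '{print $2, $1}'            # reorder to "name count"
}
```

strace writes to stderr, so a live capture would look like `strace -p [PID] -e trace=file 2>&1 | count_syscalls`. If lstat, stat and access show up in equal numbers, you are looking at the same pattern.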

Multiply that by the number of session files you have, and PHP will spend a fair amount of CPU time processing them.
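As a back-of-the-envelope estimate, the multiplication looks like this. The request volume is an assumption standing in for "a few million requests a day", and the file count is the approximate figure from the listing earlier. The lottery is random, so the number of sweeps is an average, not a guarantee.

```shell
# Hypothetical inputs: adjust to your own traffic and directory size.
requests_per_day=3000000   # assumption: "a few million" requests a day
lottery_hits=2             # from 'lottery' => [2, 100]
lottery_out_of=100
session_files=33984        # approximate, taken from the listing earlier
syscalls_per_file=3        # lstat + stat + access, per the strace output

sweeps_per_day=$(( requests_per_day * lottery_hits / lottery_out_of ))
syscalls_per_sweep=$(( session_files * syscalls_per_file ))

echo "sweeps per day:     $sweeps_per_day"
echo "syscalls per sweep: $syscalls_per_sweep"
```

With these inputs that comes to about 60,000 sweeps a day, each issuing roughly 100,000 file-related system calls.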

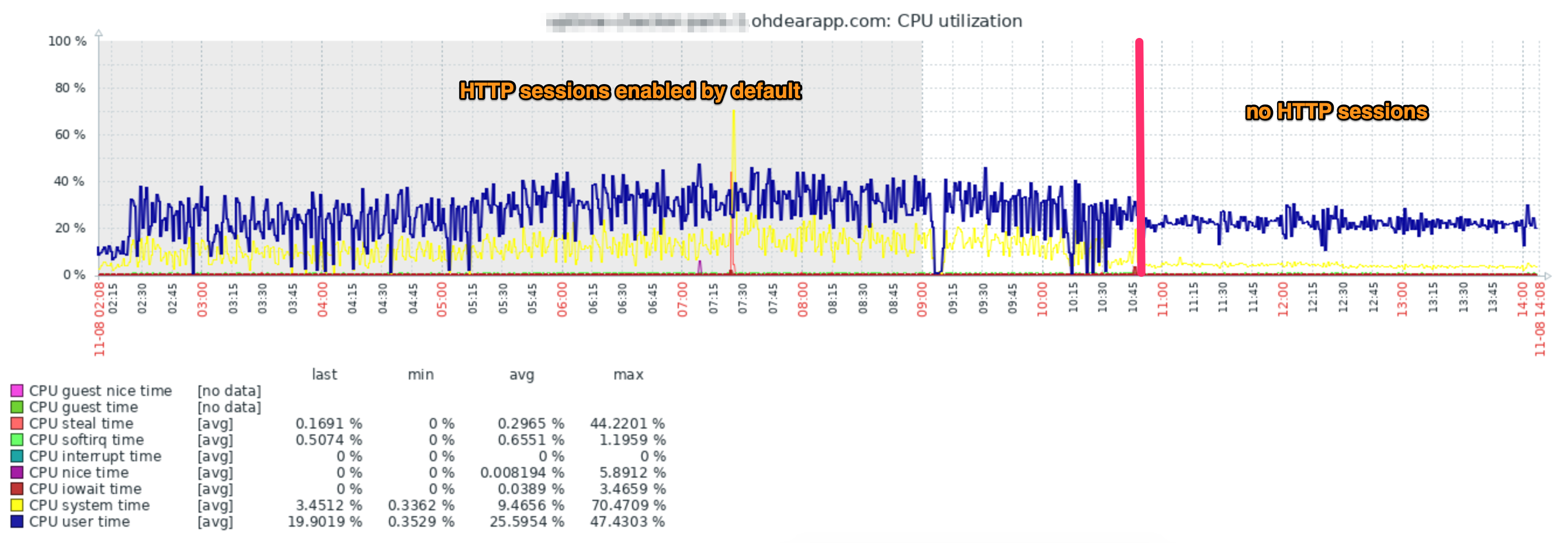

The CPU chart above is from one of our remote uptime check servers. We deployed a change that disabled HTTP sessions for our API around 10:45.

From that point forward, our system load was much more controlled and stable. The random CPU spikes caused by the session cleanup disappeared.

Disabling HTTP sessions in Laravel

If you don’t need any HTTP sessions at all, disabling them is pretty easy. Modify your app/Http/Kernel.php and remove the following pieces of middleware from the protected $middlewareGroups property (in a stock install they sit in the web group):

\Illuminate\Session\Middleware\StartSession::class,

\Illuminate\View\Middleware\ShareErrorsFromSession::class,

\App\Http\Middleware\VerifyCsrfToken::class,

Once done, your application will no longer start or load sessions.
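One way to confirm the change took effect is to check that responses no longer carry a session cookie. The sketch below is hedged on a few assumptions: the helper name `has_session_cookie` is made up, and it expects the cookie name to end in `_session` (for example `laravel_session`), which is what the stock session config derives from the app name. Adjust the pattern if you customised the cookie name.

```shell
# Read HTTP response headers on stdin.
# Exit status 0 if a session cookie is being set, non-zero otherwise.
has_session_cookie() {
    grep -qiE '^set-cookie:[[:space:]]*[^=;]*_session='
}
```

Against a live endpoint (the URL here is a placeholder), dump only the headers and pipe them in: `curl -s -o /dev/null -D - https://your-api.example/ | has_session_cookie && echo "sessions still enabled"`.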

Why not just switch to {memcached,redis,…}?

Yes, Memcached and Redis have a cleanup mechanism of their own that would have prevented the random CPU spikes too. After all, it’s no longer PHP that has to do the cleanup.

But since we don’t need sessions, I also don’t want to open a connection/socket to the session handler for every request. It’s a lot cleaner for us to just disable HTTP sessions in our API.

If you have to use the file session handler …

… it might be worth investigating whether you can disable the lottery/garbage collection entirely, and run a custom worker process that does the cleanup for you.

If you disable the lottery and don’t clean up manually, the session files will just keep piling up and eventually consume all your disk space. But taking an out-of-band approach to cleaning up your sessions might be a benefit there.

Your HTTP calls will no longer have random CPU spikes due to the session cleanup, since that would be handled in your custom worker.
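Such a worker can be as small as a scheduled `find`. This is a minimal sketch, not a drop-in solution: the function name `sweep_sessions` is hypothetical, the 120-minute default is an assumption that should match the `lifetime` value in your session config, and `-mmin` and `-delete` are GNU/BSD `find` extensions rather than POSIX.

```shell
# Delete session files that have not been modified for more than $2 minutes.
# $1: session directory (defaults to the path used in this post)
# $2: maximum age in minutes (assumed default: 120)
sweep_sessions() {
    local dir="${1:-storage/framework/sessions}"
    local minutes="${2:-120}"
    # Only touch regular files directly in the directory; keep the .gitignore.
    find "$dir" -maxdepth 1 -type f ! -name '.gitignore' \
        -mmin "+$minutes" -delete
}
```

Called from cron or a systemd timer every few minutes, this moves the directory scan out of the request path. You can also run it under `nice` or `ionice` so it does not compete with PHP for resources.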

Will this help your performance?

It depends.

In our case, it made a significant difference because of the number of API calls we handle. If you serve fewer than a few thousand API calls a day, chances are you won’t be bitten by this, or the impact will be minimal.