This is a really quick write-up on how I’ve been running HTTP/2 on my server for the last 2 months, despite having an OS that doesn’t support OpenSSL 1.0.2.

The setup uses a Docker container to run Nginx, built on the latest Alpine Linux distribution, which ships a modern OpenSSL without any extra work. The container uses the same Nginx configurations as the host server, and a network binding mode that doesn’t require me to remap any ports using iptables/haproxy/…

An nginx docker container for HTTP/2

The really quick way to get started is to run this command.

$ docker run --name nginx-container --net="host" -v /etc/nginx/:/etc/nginx/ -v /etc/ssl/certs/:/etc/ssl/certs/ -v /etc/letsencrypt/:/etc/letsencrypt/ -v /var/log/nginx/:/var/log/nginx/ --restart=always -d nginx:1.11-alpine

Once it’s running, it’ll show itself as “nginx-container”:

$ docker ps
CONTAINER ID   IMAGE               COMMAND                  CREATED       STATUS
960ee2adb381   nginx:1.11-alpine   "nginx -g 'daemon off"   6 weeks ago   Up 2 weeks

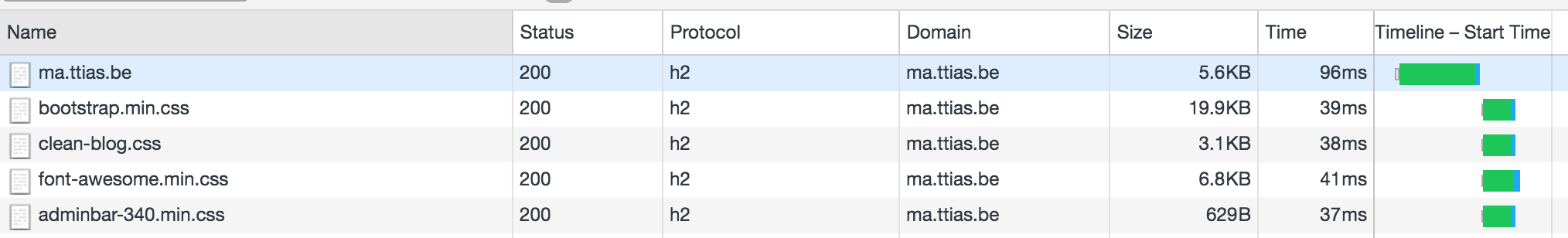

And the reason I’m doing this in the first place: to get HTTP/2 back up on Chrome after they disabled support for NPN. Chrome now only negotiates HTTP/2 over ALPN, and that needs OpenSSL 1.0.2 or newer on the server.

Running a Docker container with those arguments does a few things that are worth pointing out.

Host networking in Docker

First: --net="host" tells the Docker daemon not to put the container on its own private network (the default “bridge” mode, where ports have to be published and mapped to the host), but to share the network stack of the host it’s running on. This means that when Nginx in the container binds to port :443 or :80, it binds directly on the host (the server running the Docker daemon), with no port mapping in between.
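To make the difference concrete, here is a rough sketch of the same image started both ways. The container names nginx-bridge and nginx-host and the DOCKER variable are made up for illustration, and the -v mounts are left out to keep it short.

```shell
#!/bin/sh
# DOCKER can be overridden (for a dry run, for instance); it is not a Docker convention.
DOCKER="${DOCKER:-docker}"
IMAGE="nginx:1.11-alpine"

# Bridge mode (Docker's default): the container gets a private network,
# so each port has to be published to the host explicitly with -p.
run_bridge() {
    "$DOCKER" run --name nginx-bridge -p 80:80 -p 443:443 -d "$IMAGE"
}

# Host mode: the container shares the host's network stack, so Nginx
# binds directly on the host's :80 and :443 and no -p flags are needed.
run_host() {
    "$DOCKER" run --name nginx-host --net="host" -d "$IMAGE"
}
```

Only one of the two can hold ports :80 and :443 at a time, so this is for comparison, not something to run side by side.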

The --net="host" option also makes it easier to use Nginx as a reverse proxy. All the configurations generated on my server look like this:

location / {
    proxy_pass http://localhost:8080;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto https;
    proxy_read_timeout 60;
    proxy_connect_timeout 60;
    proxy_redirect off;
}

In a default Docker container, localhost refers to the container itself; it has no idea what’s running on the host on port :8080. With --net="host" the container shares the host’s loopback interface, so Nginx can connect back to the host, in my case to the Apache service running on port :8080 (which isn’t run in a Docker container).
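A quick way to convince yourself of this is to request the backend from inside the container. This is only a sketch: check_upstream and the DOCKER/CONTAINER variables are my own naming, and it assumes the BusyBox wget that Alpine-based images normally ship.

```shell
#!/bin/sh
# DOCKER and CONTAINER are overridable helpers; the defaults match this post.
DOCKER="${DOCKER:-docker}"
CONTAINER="${CONTAINER:-nginx-container}"

# Request the backend from inside the container. With --net="host" this
# reaches the host's service on :8080; in bridge mode it would only work
# if something listened on :8080 inside the container itself.
check_upstream() {
    "$DOCKER" exec "$CONTAINER" wget -q -O /dev/null "http://localhost:8080/" &&
        echo "upstream reachable"
}
```

Keep in mind that wget also exits with an error when the backend answers with an HTTP error status, so a failure here doesn’t always mean a networking problem.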

Add local configurations to the container

Second: the multiple “-v” parameters add mount points to your docker container, shared from the host. In this case I’m mapping the directories /etc/nginx, /etc/ssl/certs, /etc/letsencrypt and /var/log/nginx into the docker container.

It also allows me to re-use my existing Let’s Encrypt certificates and renewal logic: the renewal updates the files on the host, and the container reads those through the mounts.

There’s a small catch though: a change in SSL certificates needs a reload from the Nginx daemon, which now means a reload of the Docker container.
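One way to handle that reload without restarting the whole container is to signal Nginx through docker exec. A minimal sketch, assuming the container name from the command above; reload_nginx and the DOCKER/CONTAINER variables are hypothetical helpers, not part of my actual setup.

```shell
#!/bin/sh
# DOCKER and CONTAINER can be overridden; the defaults match the command in this post.
DOCKER="${DOCKER:-docker}"
CONTAINER="${CONTAINER:-nginx-container}"

reload_nginx() {
    # "nginx -t" validates the configuration first, so a broken config
    # or a missing certificate file never replaces the running one.
    "$DOCKER" exec "$CONTAINER" nginx -t &&
        "$DOCKER" exec "$CONTAINER" nginx -s reload
}
```

Calling reload_nginx from whatever hook your renewal logic offers should be enough; a plain docker restart nginx-container works too, at the cost of briefly dropping connections.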

Restart container when it crashes

Third: --restart=always will cause the container to restart automatically if the nginx daemon running inside it crashes or segfaults.

It hasn’t actually done that (yet), but it’s a sane default as far as I’m concerned.
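If you want to check whether the restart policy ever kicked in, docker inspect exposes both the policy and a restart counter. A small sketch, with restart_info and the DOCKER/CONTAINER variables being my own naming:

```shell
#!/bin/sh
# DOCKER and CONTAINER are overridable helpers; the defaults match this post.
DOCKER="${DOCKER:-docker}"
CONTAINER="${CONTAINER:-nginx-container}"

# Print the configured restart policy and how often Docker has
# restarted the container so far.
restart_info() {
    "$DOCKER" inspect \
        -f 'policy={{.HostConfig.RestartPolicy.Name}} restarts={{.RestartCount}}' \
        "$CONTAINER"
}
```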

Use the official Nginx docker image

Fourth: -d runs the container detached, in the background, and nginx:1.11-alpine is the official docker image from Nginx, built on the Alpine distribution.

The first time you run it, it will download this image from the Docker hub and store it locally.

Config management in the container

By binding my directories on the host directly into the container, I can keep using the same config management I’ve been using for years.

For instance, my Puppet configuration will write files to /etc/nginx. Because I mapped that to the Docker container, that container gets the exact same configurations.

It’s essentially configuration management on the host, runtime in a container. It’s considerably easier to adopt Docker if you can re-use premade containers and supply them with your own configurations.

The challenges with Docker

Sure, there are plenty. Do I know what’s in that container? Not fully. Do I trust whoever made that container? Only partially. Who updates the Nginx in that container when a critical vulnerability comes around? I have no clue.

It’s a leap of faith, to be honest – time will tell if it’s worth it.