I’ve just finished migrating this website from WordPress to a statically generated site built with Hugo. It took quite a bit of preparation to make sure all my old URLs still worked and the content displayed properly.

In this post I’ll share some of the methods I used to make this a smooth transition.

Put the new site online on a temp URL

To make it a bit easier to test everything, I created a random subdomain called engo4xieloo1.ma.ttias.be and deployed the site there.

This gave me a couple of advantages:

- I can test the build process (CSS minification, etc.)

- I can share the URL with some friends to let them test things

Just beware that you don’t want to leave this online for too long as it risks getting indexed or crawled.

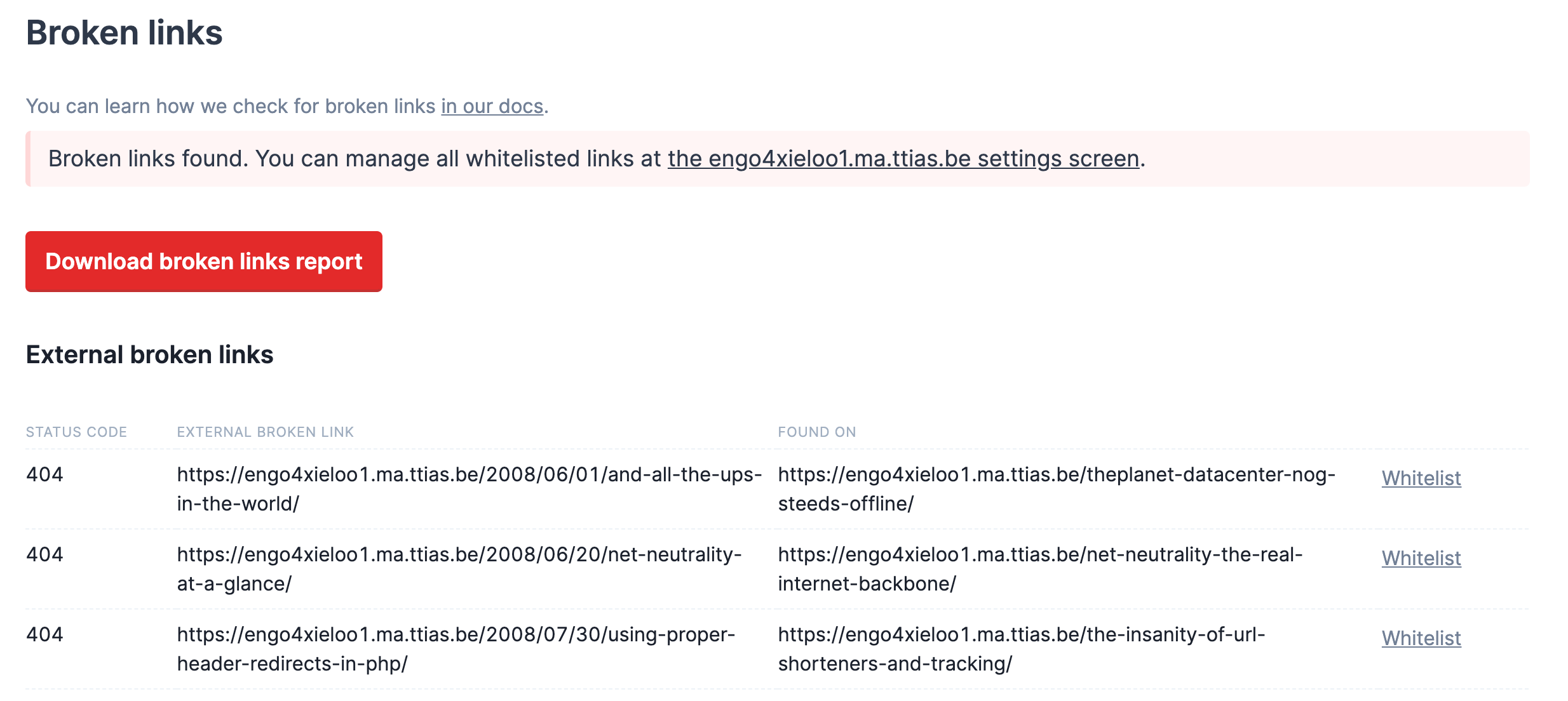

Check for broken links

Because changing websites is such a big move, I wanted to make sure I didn’t miss anything obvious. I used Oh Dear’s broken links check to crawl my temporary URL and report any errors to me.

Boy this was a lot of work. Over 100 broken URLs!

And these are just the ones the crawler could find. Surely there are URLs floating online that won’t work anymore, either.

Check your most requested pages

I used my servers’ access logs to find the most popular pages on my site, disregarding static content like images, CSS, JavaScript, …

    $ awk '{print $7}' access.log |
        grep -vP '\.(css|js|woff|png|jpg|ico)' |
        sort |
        uniq -c |
        sort -n |
        awk '{print $2 }' |
        tail -n 500 > urls.txt

This gave me a list of my top pages.

    [...]
    /socks-proxy-linux-ssh-bypass-content-filters/
    /changing-the-time-and-timezone-settings-on-centos-or-rhel/
    /update-docker-container-latest-version/
    /ssh-error-unable-negotiate-ip-no-matching-cipher-found/
    /feed
    /xmlrpc.php
    /robots.txt
    /feed/?cat=-1009
    /wp-login.php
    /
    /feed/

Some of them could easily be ignored. The new site doesn’t have a wp-login.php or xmlrpc.php anymore - those are specific to WordPress. After a bit of manual cleanup, I had a list that I could work with. The URLs were saved to urls.txt.
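Part of that cleanup could be scripted. A minimal sketch, assuming the only entries you want to drop automatically are the WordPress PHP endpoints mentioned above; the function name `clean_urls` and the output file `urls.clean.txt` are made up for illustration, and anything else still needs a manual look:

```shell
# Hypothetical helper: drop WordPress-only endpoints from a URL list on stdin.
# The pattern is anchored at the start of the line, so variants with a query
# string (e.g. /wp-login.php?action=...) are removed as well.
clean_urls() {
  grep -vE '^/(wp-login|xmlrpc)\.php'
}

# Usage (file names are assumptions):
#   clean_urls < urls.txt > urls.clean.txt
```

Writing to a separate file keeps the original list around in case the filter turns out to be too eager.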

Next, I checked every URL against the new site at its temporary location.

    $ while read -r url; do
        >&2 echo -n "$url: "
        curl -s --show-error --fail "https://engo4xieloo1.ma.ttias.be$url" > /dev/null
        >&2 echo ""
      done < urls.txt 2> result.txt

In my result.txt file I now have a list of every page that was visited on my old site and the error, if any, it triggered on the new site.

    $ grep 404 result.txt
    /feed-links: curl: (22) The requested URL returned error: 404 Not Found
    /page/2/?s=dns: curl: (22) The requested URL returned error: 404 Not Found
    /page/2/?s=http: curl: (22) The requested URL returned error: 404 Not Found
    [...]

An actionable list to fix!
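Before fixing things one by one, it can help to see which kinds of failures dominate. A rough sketch, assuming result.txt contains curl’s `--fail` messages in the format shown above; `count_errors` is a hypothetical name:

```shell
# Hypothetical helper: count failed URLs per HTTP status code.
# Expects lines like:
#   /path: curl: (22) The requested URL returned error: 404 Not Found
# Lines without an error (successful requests) are ignored.
count_errors() {
  grep -oE 'returned error: [0-9]{3}' |
    awk '{ print $3 }' |
    sort |
    uniq -c |
    sort -rn
}

# Usage:
#   count_errors < result.txt
```

Each output line is a count followed by a status code, with the most frequent code first.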

For the most important URLs, I set up additional redirect rules. Some errors I could ignore, because the functionality has simply been removed from the new site.
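Whatever mechanism the redirects live in, it’s worth confirming that each one answers the way you expect. A small sketch, assuming you inspect the response headers fetched with `curl -sI`; the helper name `redirect_summary` and the example path are hypothetical, not taken from my actual redirect list:

```shell
# Hypothetical helper: read raw HTTP response headers on stdin and print
# "<status> <location>", or "<status> -" when there is no Location header.
redirect_summary() {
  awk '
    BEGIN { status = "???"; location = "-" }
    { sub(/\r$/, "") }                            # strip CR from CRLF line endings
    /^HTTP\// { status = $2 }                     # status line, e.g. "HTTP/1.1 301 ..."
    tolower($1) == "location:" { location = $2 }  # header names are case-insensitive
    END { print status, location }
  '
}

# Usage (the path is a placeholder):
#   curl -sI "https://engo4xieloo1.ma.ttias.be/some-old-path/" | redirect_summary
```

A working redirect should show a 30x status together with the new location; a 404 followed by `-` means the rule isn’t matching.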

Verify the URLs on the new website

Once you’ve actually deployed the new site, it’s time to watch the logs again. This time, to check if there are external sites linking to your site with outdated URLs.

    $ grep -vP ' (20[0-9]|30[0-9]) ' access.log |
        awk '{print $7}' |
        sort |
        uniq -c |
        sort -n

The grep above lists every request in the access log that doesn’t have a 20x or 30x status code. In other words: if it wasn’t served or redirected, I’d want to know.

This gave yet another list of URLs to check, this time external requests making it to the new site. This will also result in a set of redirects to be configured to catch the important content.
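One caveat with the grep: it drops any line that contains a 20x or 30x number surrounded by spaces, so a failing request whose response size happens to be, say, 204 bytes slips through unnoticed. A sketch of a stricter variant that tests the status field itself, assuming the common “combined” log format where the path is field 7 (as in the commands above) and the status code is field 9; `failed_requests` is a hypothetical name:

```shell
# Hypothetical helper: list paths whose status code (field 9) is not 20x/30x,
# each with a hit count, busiest last. Reads access-log lines on stdin.
failed_requests() {
  awk '$9 !~ /^(20|30)[0-9]$/ { print $7 }' |
    sort |
    uniq -c |
    sort -n
}

# Usage:
#   failed_requests < access.log
```

If your log format differs, the field numbers will need adjusting.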

    [...]
    12 /why-do-we-automate/feed/
    13 /projects/feed/
    28 /xmlrpc.php
    29 /patch-your-webservers-for-the-sslv3-poodle-vulnerability-cve%C2%AD-2014%C2%AD-3566/
    31 /technical-guide-seo/downloader/index.php
    67 /blog/feed/
    100 /rss-feeds/feed/
    115 /wp-login.php

Not everything needs to be handled, but I found a couple of important URLs to add to my redirect list.

Your server logs are valuable!

All this to say: your server-side logs are pretty valuable. They record the requests that never show up in Google Analytics or other trackers.

It’s worth having a look at them. 😄