Every Sunday, I send out a newsletter called cron.weekly to over 10,000 subscribers. In this post, I’ll take a deep dive into how each issue gets put together and delivered to subscribers.

Let’s talk tech, workflows and curation! 🔥

Gathering interesting links

The first (and arguably, most important) thing to do is collect all the links to include in the newsletter.

Self-found articles

Throughout the week, I bookmark every interesting link I see using the Pocket extension.

A single click in the browser and it’s bookmarked for later processing. I’m pretty liberal about what I bookmark. At this stage, I don’t have to read every article: if it looks interesting, I bookmark it. Curation happens later.

Submitted by readers

Quite often a reader replies to an issue with a follow-up or a different point of view from what I wrote. That reply usually comes with a couple of links for me to check out, and chances are that feedback makes it into next week’s issue.

Side note: if you have an interesting article, feel free to send it to me via Twitter (my DMs are open) or via email.

Processing the links

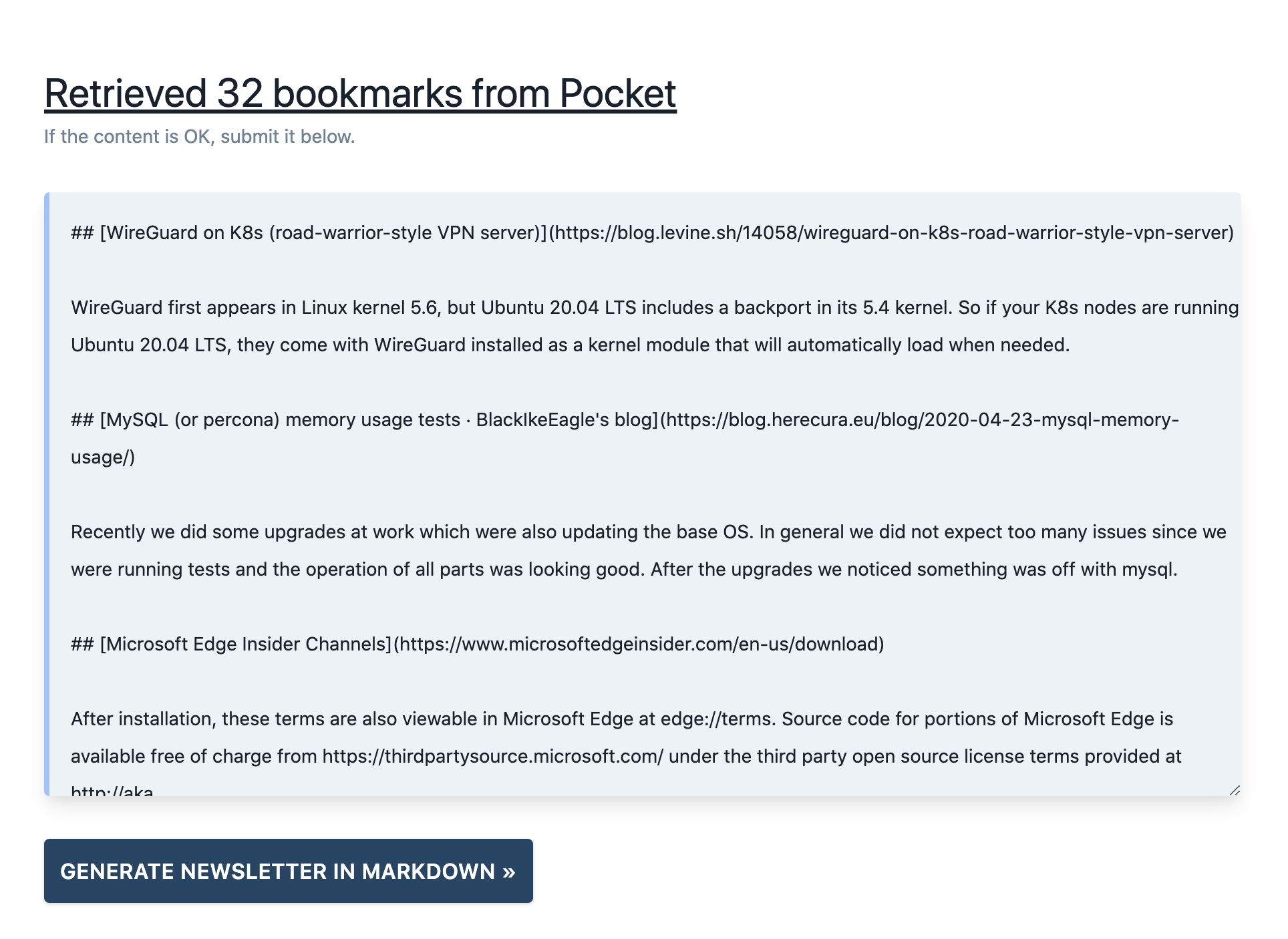

I wrote a small script that talks to the Pocket API, retrieves all the links and formats them in Markdown for me. It’s really only useful to me, but it’s available on GitHub if you’re interested: mattiasgeniar/generator.cronweekly.com-v2.

Mind you, it isn’t the prettiest code, but it’s good enough for me.

The result is a suggestion of newsletter content, formatted in a way I can work with.

This saves me a lot of time on the newsletter’s syntax, since everything is written in Markdown. Pocket also provides a decent summary of each article, which I only have to tweak slightly.
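The generator itself lives in the repository above. Purely to illustrate the idea, here is a minimal Python sketch that turns Pocket items into Markdown bullets. It assumes the item fields of Pocket’s v3 retrieve response (`resolved_title`, `resolved_url`, `excerpt`, `time_added`, with `given_title` and `given_url` as fallbacks), skips the authenticated HTTP call entirely, and uses a hypothetical function name and output layout rather than the generator’s real format.

```python
from typing import Dict, List


def pocket_items_to_markdown(items: Dict[str, dict]) -> str:
    """Turn a Pocket-style ``list`` mapping (item_id -> item) into Markdown bullets.

    Field names are assumed from Pocket's v3 API; the "- [title](url): excerpt"
    layout is a hypothetical choice for illustration.
    """
    lines: List[str] = []
    # Sort by time_added (a string of epoch seconds) so the oldest bookmark comes first.
    for item in sorted(items.values(), key=lambda i: int(i.get("time_added", 0))):
        title = item.get("resolved_title") or item.get("given_title") or "Untitled"
        url = item.get("resolved_url") or item.get("given_url", "")
        excerpt = item.get("excerpt", "").strip()
        entry = f"- [{title}]({url})"
        if excerpt:
            # The excerpt is only a starting point; it gets rewritten by hand later.
            entry += f": {excerpt}"
        lines.append(entry)
    return "\n".join(lines)
```

Falling back from the resolved fields to the given ones keeps a bullet usable even when Pocket could not resolve the page.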

Writing the web version first

With the Pocket suggestion available in Markdown format, now begins the most time-intensive part: writing.

While Pocket makes good suggestions for the descriptions of projects, they don’t quite work for items in the news or tutorials sections.

At this point, I haven’t read every link yet (I bookmark a lot, often based on the title alone), so about 50% of them get thrown away in this step.

This is the stage that takes me 2-3 hours: half of it is spent typing, the other half reading the articles.

Generating the HTML & TXT from the web version

This step might seem a bit backwards, but it works for my workflow: once the web version is ready, I use it to generate the HTML and TXT versions of the newsletter.

The input for the conversion is the Markdown file that Hugo parses. It looks like this:

```markdown
---
title: 'cron.weekly issue #131: Ubuntu 20.04, Moloch, eBPF, xsv, desed & more'
author: mattias
date: 2020-04-26T06:50:00+01:00
publishDate: 2020-04-26T06:50:00+01:00
url: /cronweekly/issue-131/
---

Hi everyone! 👋

Welcome to cron.weekly issue #131.

Last week ...
```
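Before rendering anything, a converter has to separate that front matter from the body. Here is a minimal Python sketch of that first step. It assumes the flat `key: value` lines shown above rather than full YAML (a real converter would use a YAML parser), and `split_front_matter` is a hypothetical name, not part of the PHP converter.

```python
from typing import Dict, Tuple


def split_front_matter(text: str) -> Tuple[Dict[str, str], str]:
    """Split a Hugo-style Markdown file into (front matter, body).

    Only handles flat "key: value" lines between two "---" markers;
    nested YAML, lists and multi-line values are out of scope.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    meta: Dict[str, str] = {}
    for index, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            # Everything after the closing marker is the newsletter body.
            body = "\n".join(lines[index + 1:]).strip()
            return meta, body
        if ":" in line:
            # Split on the first colon only: titles and timestamps contain more colons.
            key, value = line.split(":", 1)
            meta[key.strip()] = value.strip().strip("'")
    # No closing marker: treat the whole file as body.
    return {}, text
```

Splitting on the first colon matters here, because both the title and the ISO timestamps contain colons of their own.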

I wrote a PHP converter and call it like this:

```console
$ ./generate-newsletter-mail.sh -t | pbcopy
$ ./generate-newsletter-mail.sh -h | pbcopy
```

It generates the TXT version (-t flag) or the HTML version (-h flag), and piping the output into pbcopy copies it to my clipboard on macOS.

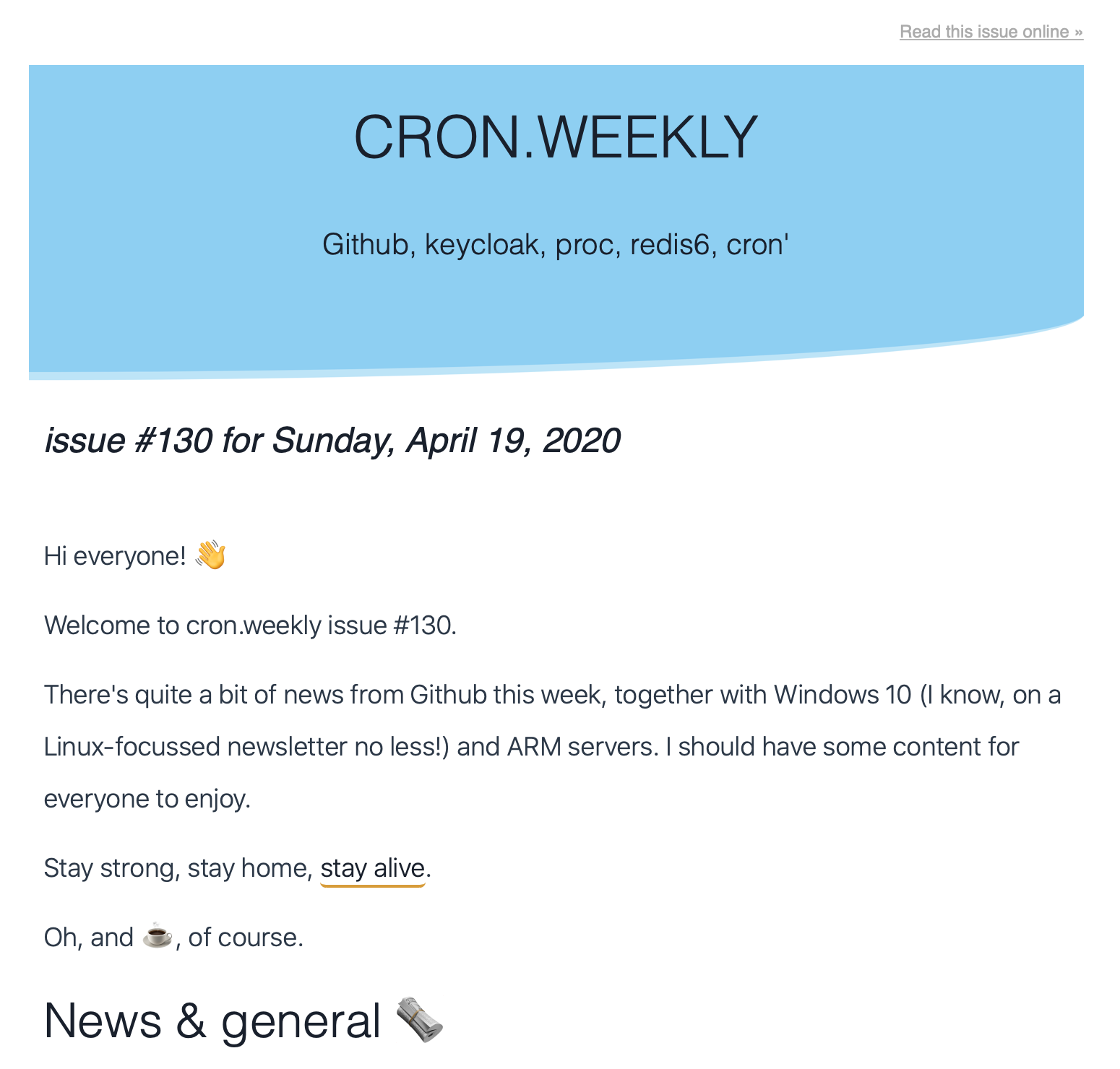

I’ve spent a considerable amount of time optimizing both views to look good in any e-mail client. The script above generates this HTML output:

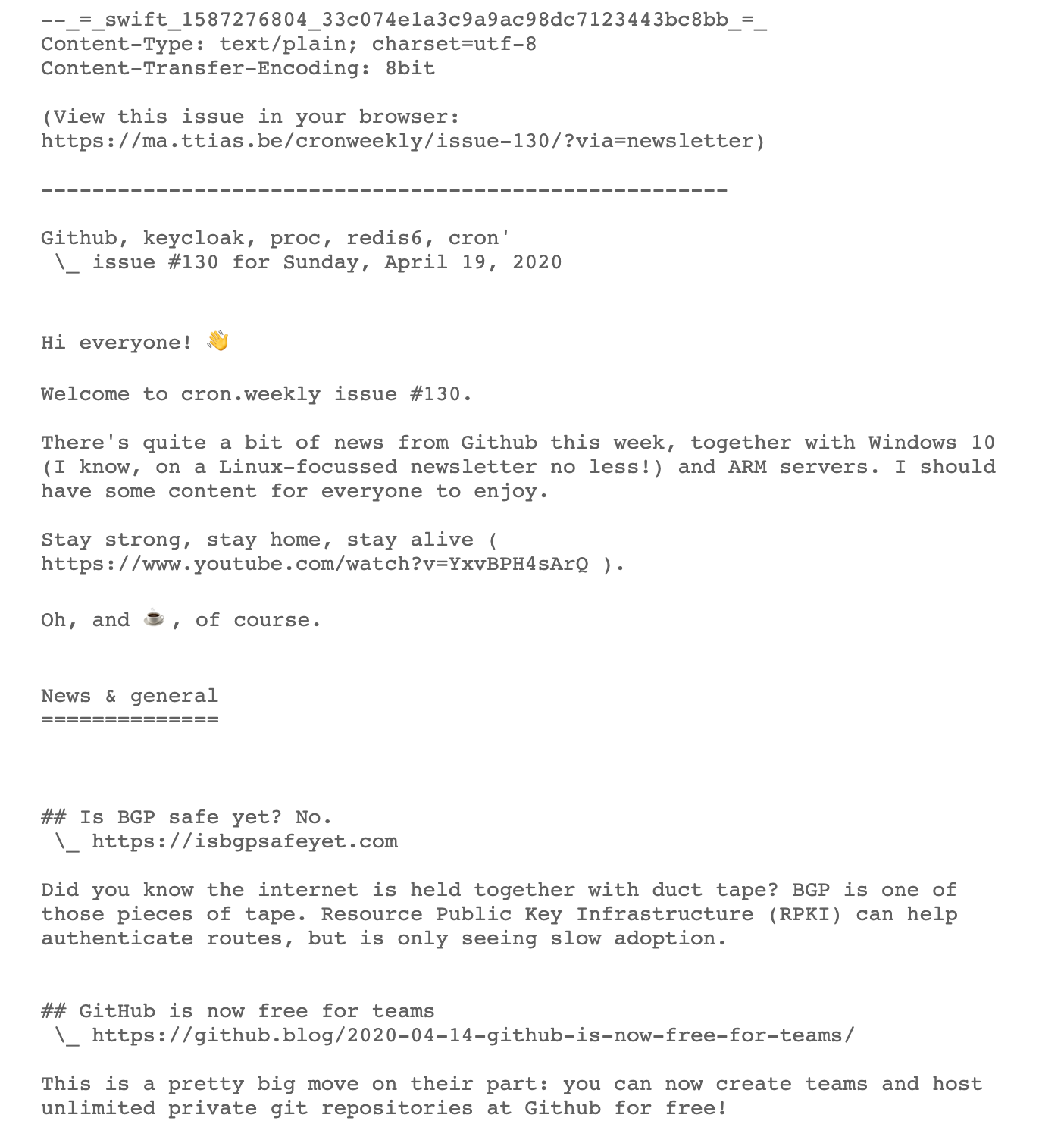

Because the audience is very technical, a surprising number of readers use Mutt, a text-only client, so they get the TXT version delivered to them.

I experimented with more ASCII art, but it renders differently in every client (it depends on your chosen font), so I dropped it altogether. Clean & simple.
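A plain-text version mostly comes down to removing Markdown syntax while keeping every URL visible. The sketch below is one hypothetical way to do that in Python: it strips heading and bold markers and rewrites inline links as `text (url)`. The layout is an assumption for illustration, not the exact format of the cron.weekly TXT version.

```python
import re

# Inline Markdown links: [text](url)
LINK_RE = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")
# Bold text: **text**
BOLD_RE = re.compile(r"\*\*([^*]+)\*\*")
# ATX heading markers such as "## " at the start of a line
HEADING_RE = re.compile(r"^#{1,6}\s+", re.MULTILINE)


def markdown_to_txt(body: str) -> str:
    """Strip basic Markdown syntax for a text-only e-mail client.

    Links become "text (url)" so every URL stays readable and copyable.
    """
    text = HEADING_RE.sub("", body)
    text = BOLD_RE.sub(r"\1", text)
    text = LINK_RE.sub(r"\1 (\2)", text)
    return text
```

Keeping the URL inline, next to the sentence it belongs to, means a Mutt reader can copy it without scrolling to a reference list.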

3 versions are now ready

At this point, I have 3 output versions of the newsletter ready.

- The web version gets published in the archives and the RSS feed

- The HTML version for the graphical users

- The TXT version for the die-hard fans

The web version gets published automatically at the right time, using Hugo’s publishDate front matter field and a cron job on the server that regenerates the site every hour.

```console
$ crontab -l
55 * * * * cd ma.ttias.be/repo && ./update.sh
```

The hourly cron job runs at 5 minutes before the hour, so the web version is regenerated on time, just before the mailing goes out a few minutes later.
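The timing works because Hugo leaves out pages whose publishDate is still in the future (unless the site is built with --buildFuture). Here is a small Python sketch of that interplay, using the publishDate from issue #131 above. It assumes the server clock and the publishDate share the same UTC offset, ignores daylight-saving transitions, and both function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def next_cron_run(now: datetime, minute: int = 55) -> datetime:
    """Next firing time of an hourly cron entry like "55 * * * *".

    Assumes `now` is expressed in the time zone cron uses on the server
    and ignores daylight-saving transitions.
    """
    candidate = now.replace(minute=minute, second=0, microsecond=0)
    if candidate < now:
        # This hour's slot has already passed; cron fires again an hour later.
        candidate += timedelta(hours=1)
    return candidate


def is_published(publish_date: datetime, build_time: datetime) -> bool:
    """Hugo renders a page only once its publishDate is not in the future."""
    return publish_date <= build_time


if __name__ == "__main__":
    offset = timezone(timedelta(hours=1))  # the +01:00 offset from the front matter
    publish = datetime(2020, 4, 26, 6, 50, tzinfo=offset)
    run = next_cron_run(datetime(2020, 4, 26, 6, 40, tzinfo=offset))
    print(run.isoformat(), is_published(publish, run))  # 2020-04-26T06:55:00+01:00 True
```

A publishDate a few minutes before the :55 slot is what guarantees the rebuild picks the issue up in time.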

Sending the newsletter

There are two important components to sending out the newsletter: the client and the MTA.

Using Mailcoach

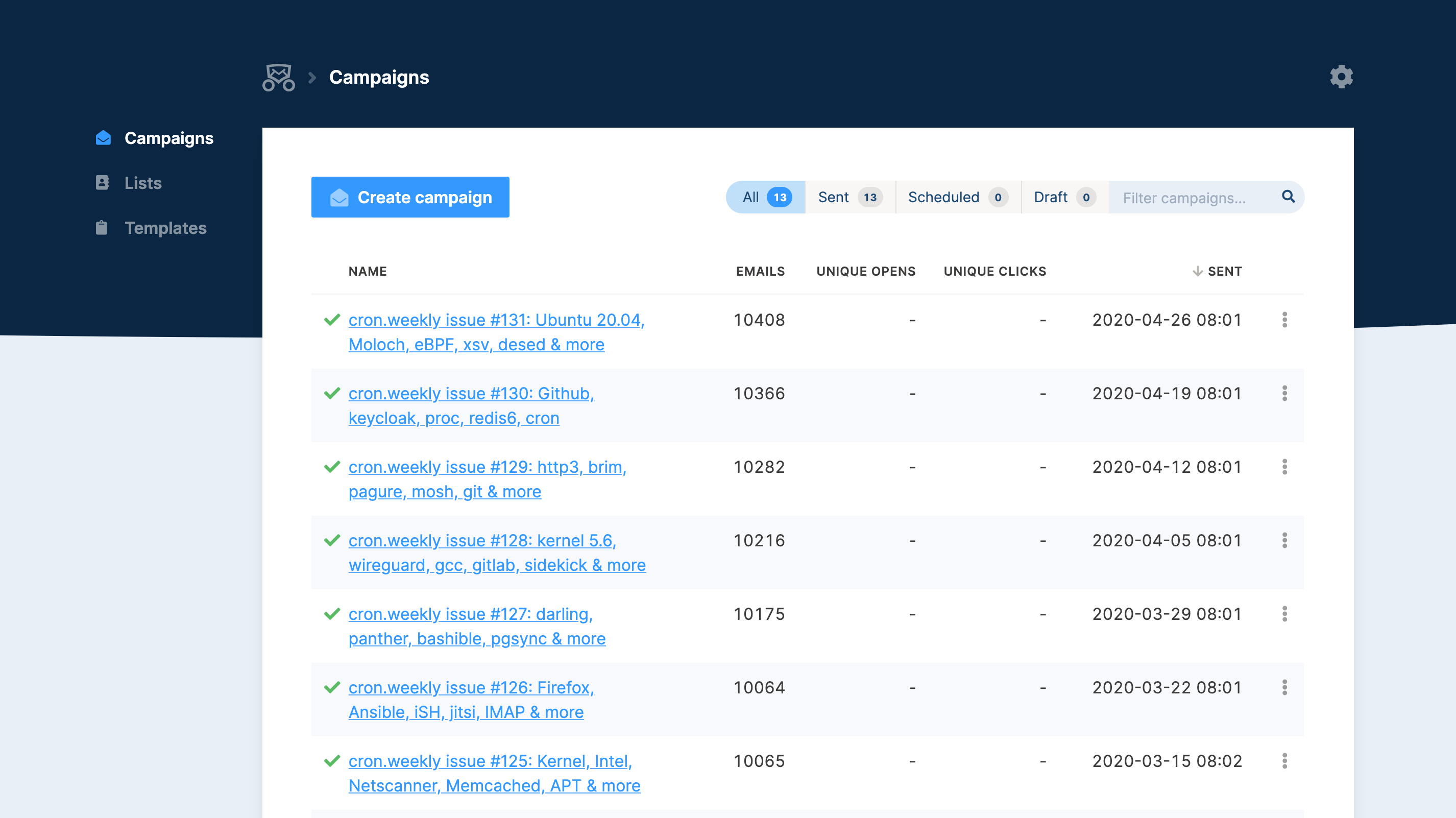

I use a PHP tool called Mailcoach that lets me configure the newsletter, set the HTML and TXT body and send them to all subscribers.
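Mailcoach takes care of building the actual message, but the general mechanism for shipping an HTML and a TXT body in one e-mail is a multipart/alternative message (RFC 2046). Here is a generic sketch using Python’s standard email package. It is not Mailcoach’s code, and the function name is hypothetical.

```python
from email.message import EmailMessage


def build_newsletter(sender: str, recipient: str, subject: str,
                     text_body: str, html_body: str) -> EmailMessage:
    """Build one message that carries both the TXT and the HTML version."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    # First part: text/plain.
    msg.set_content(text_body)
    # Adding an alternative turns the message into multipart/alternative
    # with the HTML as the second (last) part.
    msg.add_alternative(html_body, subtype="html")
    return msg
```

The order matters: clients render the last alternative they support, so graphical clients show the HTML part while a text-only client like Mutt typically falls back to the plain-text part.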

To collect new e-mail signups, I embed the Mailcoach form on this (static) website. The form you see on the cron.weekly archive pages POSTs to Mailcoach, which stores the e-mail addresses and handles the double opt-in confirmation.
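Double opt-in means a sign-up only becomes a subscription after the owner of the address clicks a confirmation link. Here is a toy Python sketch of that flow, assuming an HMAC-signed token. This is not how Mailcoach implements it, and `SECRET_KEY`, `confirmation_token` and `SubscriberList` are hypothetical names.

```python
import hashlib
import hmac
from typing import Set

SECRET_KEY = b"change-me"  # hypothetical server-side secret


def confirmation_token(address: str) -> str:
    """HMAC over the address; would be embedded in the confirmation URL."""
    return hmac.new(SECRET_KEY, address.encode(), hashlib.sha256).hexdigest()


class SubscriberList:
    """Toy double opt-in: a sign-up stays pending until its token comes back."""

    def __init__(self) -> None:
        self.pending: Set[str] = set()
        self.confirmed: Set[str] = set()

    @staticmethod
    def _normalize(email: str) -> str:
        # Simplification: lowercases the whole address, including the local part.
        return email.strip().lower()

    def subscribe(self, email: str) -> str:
        address = self._normalize(email)
        self.pending.add(address)
        # In practice this token would be e-mailed, not returned to the form.
        return confirmation_token(address)

    def confirm(self, email: str, token: str) -> bool:
        address = self._normalize(email)
        if address not in self.pending:
            return False
        # Constant-time comparison avoids leaking the token through timing.
        if not hmac.compare_digest(token, confirmation_token(address)):
            return False
        self.pending.discard(address)
        self.confirmed.add(address)
        return True
```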

(Go give it a try, I swear it works. 😉 )

The web version of Mailcoach lets me see previous campaigns, monitor list growth, etc.

I strongly believe in no tracking, so there are no open or click rates to show here. Mailcoach supports them, but I don’t want to track users. 😄

I switched from Sendy to Mailcoach because Mailcoach is easy to extend, which comes in handy a bit further down.

Using Mailgun

Mailcoach, just like Sendy, can send e-mail using Amazon SES, Mailgun, Sendmail, … Since I recently got kicked off Amazon SES for too many bounces after restarting the newsletter (which was my own fault), I switched to Mailgun instead.

Mailgun is pretty fast: the newsletter reaches about 10,000 subscribers in just under 10 minutes.
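For a sense of the throughput, those round numbers work out to roughly:

```latex
\frac{10\,000\ \text{e-mails}}{10\ \text{min}} = 1\,000\ \text{e-mails/min} = \frac{1\,000\ \text{e-mails}}{60\ \text{s}} \approx 16.7\ \text{e-mails/s}
```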

I recently upgraded the Mailgun plan to the Growth plan for $80/month, which gives me longer log retention and a fixed IP address.

Since I’m now paying for Mailgun, I’ve been switching all my projects over one by one, including my outgoing e-mail from Gmail (part of a step-by-step plan to move away from Google products, but that’s for another time).

Automating the tweets

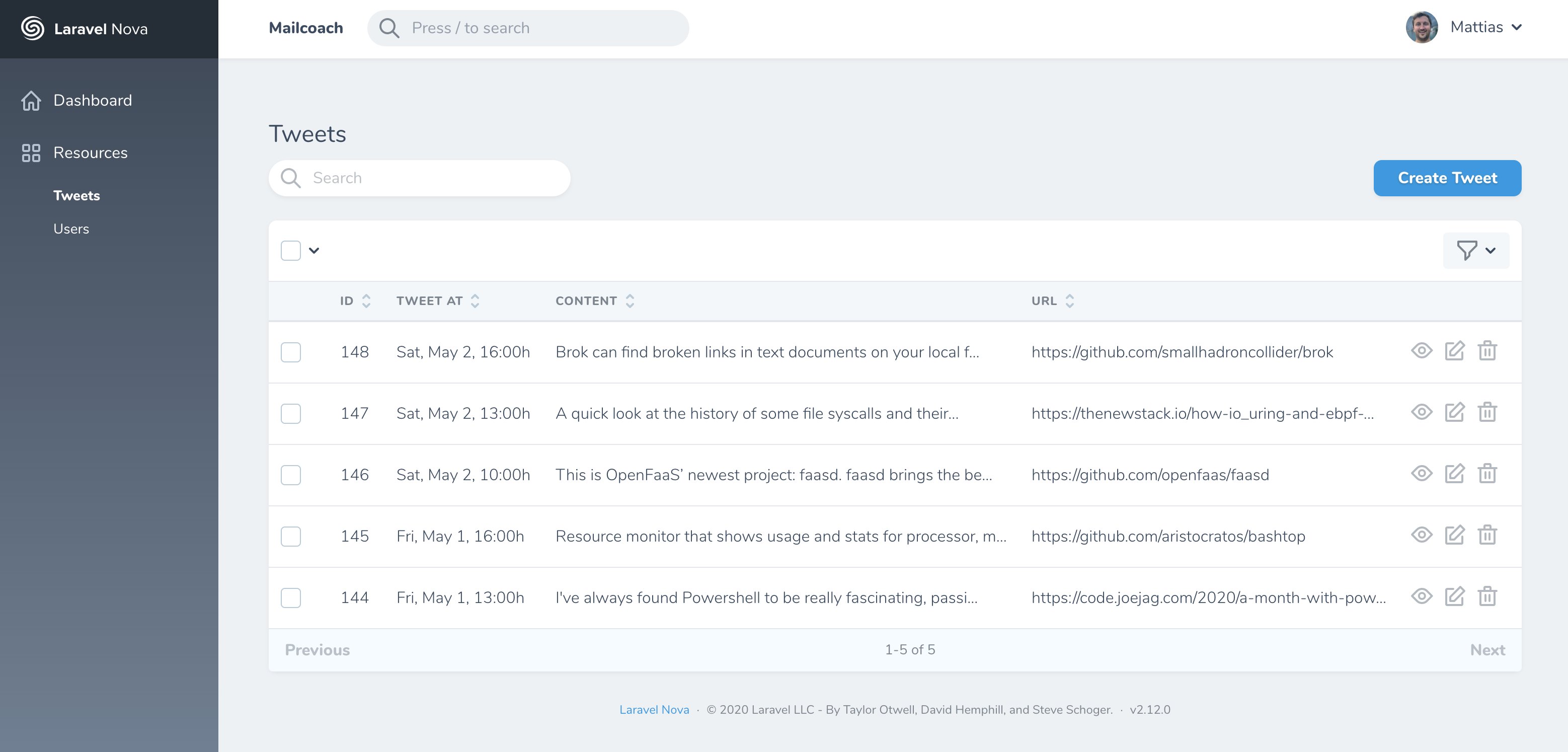

A few weeks ago, I extended the Mailcoach app with Laravel Nova, which gives me a useful admin section straight out of the box.

I wrote some extra code that parses the latest newsletter (the TXT version), extracts all the links along with my comments on them, and then randomly picks 20 links to share on Twitter, spread over the next week.

This step is almost fully automated; I sometimes tweak the copy a bit so it makes more sense as a tweet. Every tweet is suffixed with #CronWeekly, so followers who don’t want to see the newsletter repeated on their timeline can mute that hashtag.
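The tweet code runs inside the Mailcoach/Nova app and isn’t part of this post, so the following is only a hypothetical Python sketch of the same idea. It assumes the TXT version keeps each link inline as `text (url)`, one item per paragraph. It uses `random.sample` for the random pick and spreads the tweets at even intervals over seven days. Every name in it is made up for illustration.

```python
import random
import re
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Hypothetical pattern for URLs in the TXT version; stops at whitespace or ")".
URL_RE = re.compile(r"https?://[^\s)]+")


def extract_links(txt: str) -> List[Tuple[str, str]]:
    """Return (comment, url) pairs, one per paragraph that contains a URL.

    Assumes each item is its own paragraph with the link inline as "text (url)".
    """
    pairs = []
    for paragraph in re.split(r"\n\s*\n", txt):
        match = URL_RE.search(paragraph)
        if not match:
            continue  # intro text, sign-off, etc.
        url = match.group(0)
        # Drop the inline "(url)" and collapse whitespace to get the comment text.
        comment = " ".join(paragraph.replace(f" ({url})", "").split())
        pairs.append((comment, url))
    return pairs


def schedule_tweets(
    pairs: List[Tuple[str, str]],
    start: datetime,
    count: int = 20,
    days: int = 7,
    tag: str = "#CronWeekly",
    rng: Optional[random.Random] = None,
) -> List[Tuple[datetime, str]]:
    """Randomly pick up to `count` links and spread them evenly over `days`."""
    rng = rng if rng is not None else random.Random()
    chosen = rng.sample(pairs, min(count, len(pairs)))
    if not chosen:
        return []
    step = timedelta(days=days) / len(chosen)
    # Tweet i goes out i * step after `start`; the hashtag lets followers mute them.
    return [
        (start + i * step, f"{comment} {url} {tag}")
        for i, (comment, url) in enumerate(chosen)
    ]
```

Passing a seeded `random.Random` makes the selection reproducible for testing; leaving it out gives a different pick each week. The sketch does not check Twitter’s length limit, which is where tweaking the copy by hand comes in.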

The entire workflow

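When sketched out, the flow looks like this:

- Pocket bookmarks and reader submissions

- the generator script, which turns the bookmarks into a Markdown draft

- the web version in Hugo, written and curated by hand

- the PHP converter, which produces the HTML and TXT versions

- Mailcoach, which sends both versions through Mailgun

- the Nova-extended Mailcoach app, which turns the TXT version into a week of tweets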

Lots of layers building on top of other layers, modifying the content every step of the way. It’s a bit like a complex Unix pipeline, isn’t it?

GOTO 1;

And once that’s done, the whole process starts again: collect new links, curate them, send them out. 😄

A few years ago, I regretted the weekly deadline when I needed a break and was near burn-out. Now that I’ve quit my full-time job to focus on Oh Dear, DNS Spy and the newsletter, I find it a lot more enjoyable again.

Now that the user base is large enough, I can add sponsorships that help pay the bills.

If you have any other questions about running a newsletter or the business behind it, feel free to reach out!